When a distributed control system (DCS) controller fails in the middle of a production run, theory goes out the window. At that moment you are staring at tripped loops, frozen trends, and a growing pile of lost orders. From the plant floor, urgent DCS controller repair is not a "nice to have"; it is the difference between a controlled, short interruption and an all-night crisis that ripples into the rest of the week.

In this article I will walk through how urgent DCS controller repair should work if it is done properly, what you can do before the next failure to slash recovery time, and how to choose a repair partner who actually understands high-availability process control rather than generic electronics. The guidance here is grounded in established best practices for DCS maintenance, cybersecurity, and troubleshooting from industry sources such as Automation Community, the International Society of Automation (ISA), and multiple specialist repair providers, combined with what actually works out on site when the alarms will not stop.

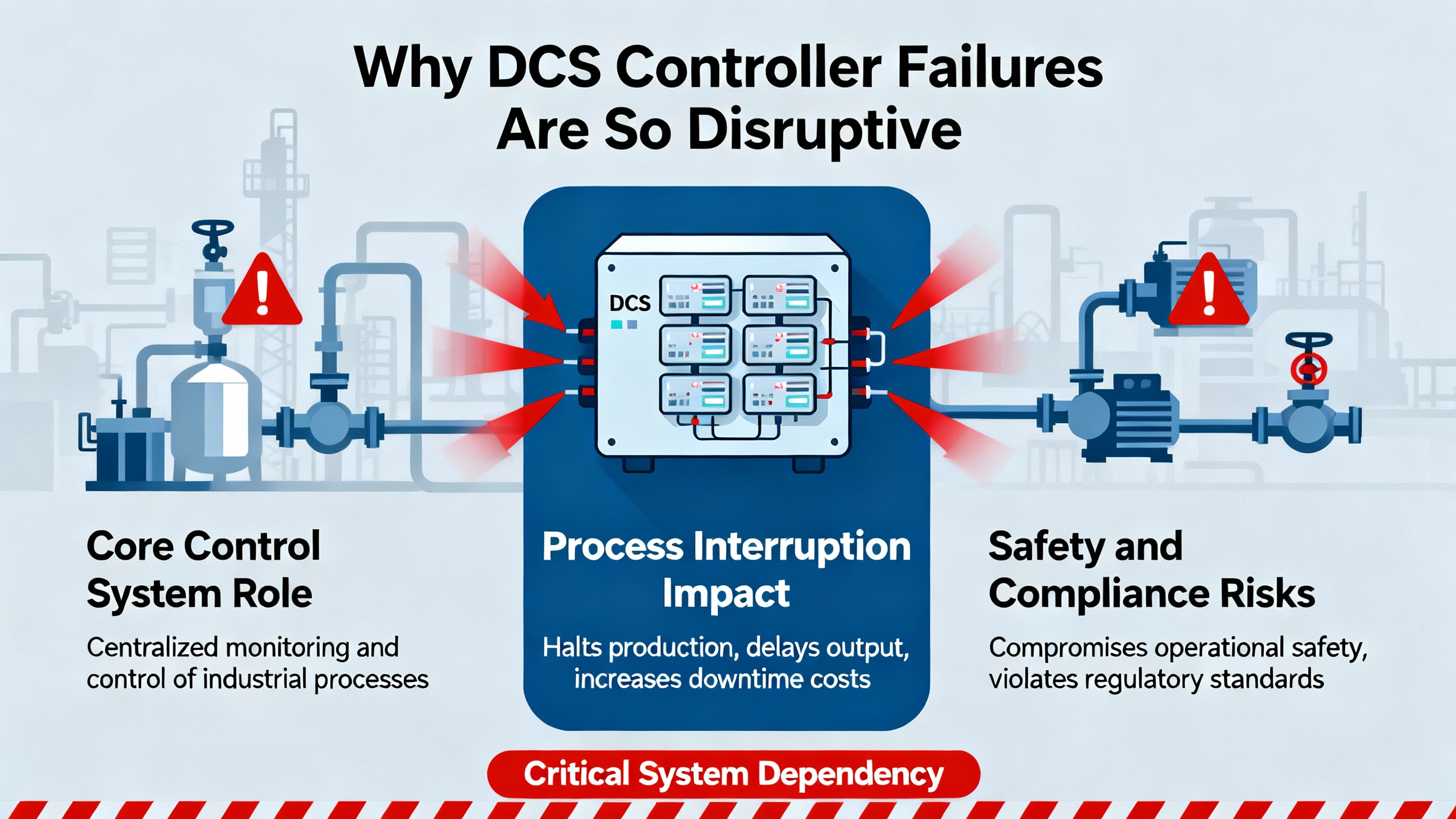

A DCS is not a single box; it is a control architecture that spreads logic across multiple controllers, I/O modules, networks, and operator stations to keep your process safe and stable. As described by Automation Community and other industrial references, this distributed structure is precisely what gives a DCS its reliability and flexibility for continuous processes in refineries, power plants, chemical units, and food and beverage lines.

The downside appears when a controller, I/O rack, or communication path at the heart of that architecture fails. Jiwei Automation points out that DCS hardware faults cluster in three critical areas: controllers, I/O modules, and networks. A failed controller can leave a whole group of loops in manual or fail-safe, while an I/O fault may make sensors disappear or push bad values into the strategy. Network faults can isolate parts of the plant so that the controller cannot see the field or the operator cannot see the controller. Even if you have redundancy, these events are rarely clean; you may still see nuisance alarms, strange latencies, or unstable control.

Complicating matters further, not every failure is purely hardware. IDS Power notes that software bugs, configuration mistakes, and data corruption in a DCS can trigger crashes, wrong setpoints, or misleading displays. Factor in environmental problems such as temperature, humidity, and electromagnetic interference, which Jiwei highlights as common contributors to instability, and you get a picture of why DCS failures can be messy to diagnose under time pressure.

Downtime from these failures is not just a number on a maintenance dashboard. It means interrupted batches, off-spec product in tanks, flaring or waste streams that strain permits, and in worst cases, safety risks for people in the plant. This is why seasoned operators treat DCS maintenance as a continuous program rather than a once-a-year chore and why an urgent repair capability is worth planning deliberately instead of improvising at two in the morning.

From a plant perspective, "urgent repair" is a promise: someone will answer the call, understand your control system, and help you get back online within a timeline that matches the cost of your downtime. When you look at how reputable industrial electronics repair and service providers describe their offerings, several common patterns emerge.

Service organizations such as Kredit Automation & Controls and MDI Advantage emphasize true 24/7 availability, not just a voicemail box. Calls are answered by engineers or trained coordinators who can start troubleshooting on the phone, not by generic call centers. Kredit Automation & Controls commits to getting a technician on site within about four hours for critical cases, framing response time in hours instead of days. That is the right mindset for plants where a day of downtime may cost more than an annual service contract.

Companies like Industrial Control Solutions position urgent repair as time-critical work with two levers: extremely fast turnaround in the repair shop and aggressive parts support to get you the specific module you need inside your required window. They back this with free, detailed quotes, competitive pricing focused on avoiding full replacement, and a fixed twelve-month warranty on repaired units. Similar repair specialists such as NJT Automation and Schneider Electric Industrial Repair Services describe one-year warranties on DCS modules, full-load testing, and targeted focus on high-failure components like I/O and power supplies. AES goes further, offering a twenty-four month limited warranty on industrial control repairs and presenting repair as a cost-effective alternative to replacement, particularly when controllers or modules are expensive or already obsolete.

Remote support has become equally important. Kredit Automation & Controls and MDI Advantage both highlight remote diagnostics as a way to start troubleshooting in minutes rather than waiting for a truck roll. Industrial Control Solutions installs communication modules so that engineers can connect to the controls equipment while maintaining separation from the corporate network for security. This matches broader guidance from ISA cybersecurity experts that remote access must be designed so that technicians can reach the control assets they need without exposing the rest of the IT environment.

In short, a serious urgent DCS controller repair service combines constant availability, fast access to actual control engineers, remote diagnostic capability, full-load testing and documented repair processes, and clear warranties. Anything less is unlikely to minimize downtime for a high-value process.

From the outside, urgent repair can look like a magic black box: a van arrives, somebody pulls a controller, and the plant comes back up. In reality, the process is systematic and grounded in fault diagnosis methods described by Jiwei Automation, IDS Power, and many DCS optimization resources.

The first task is always to stabilize the process. Before touching any controller or I/O, an experienced engineer works with the control room to put critical loops in safe states: manual mode where appropriate, interlocks verified, and any energy or material flows put into a safe configuration. In a DCS, even a partial loss of visibility can create human-factor risks. IDS Power stresses the importance of operator training and clear procedures because a stressed operator misinterpreting screens can make a bad technical fault much worse.

Once the process is safe, the focus shifts to symptom gathering. Jiwei recommends starting from what you can observe: sluggish operator stations, frozen screens, frequent alarms, missing device status, or loops that do not respond to commands. In practice, this includes checking whether the issue is limited to a single controller or area, reviewing which alarms appeared first, and confirming whether any recent maintenance, configuration changes, or power disturbances occurred.
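The "which alarms appeared first" check can be made concrete by sorting an exported alarm list and seeing how concentrated the alarms are on one controller. The following is a minimal Python sketch under stated assumptions: the `Alarm` record, the controller tags, and the 80 percent threshold are all hypothetical and do not reflect any vendor's export format.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class Alarm:
    """Hypothetical alarm record; real exports differ by vendor."""
    timestamp: datetime
    controller: str
    message: str


def first_out(alarms: List[Alarm]) -> Optional[Alarm]:
    """Return the earliest alarm, which is often closest to the root cause."""
    return min(alarms, key=lambda a: a.timestamp, default=None)


def dominant_controller(alarms: List[Alarm], threshold: float = 0.8) -> Optional[str]:
    """Return a controller tag if it raised at least `threshold` of all alarms."""
    counts = Counter(a.controller for a in alarms)
    if not counts:
        return None
    tag, hits = counts.most_common(1)[0]
    return tag if hits / sum(counts.values()) >= threshold else None
```

If `dominant_controller` returns a tag, the problem is probably local to that controller or its I/O; if it returns `None`, a network or power issue affecting several areas becomes more plausible.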

Next comes the split between hardware and software. On the hardware side, engineers perform physical inspections and electrical measurements using tools such as multimeters and oscilloscopes to verify controller power rails, I/O signal levels, and network status. Jiwei emphasizes simple but often overlooked checks for loose, damaged, or miswired cables, overheated modules, and dirty or corroded terminals. Schneider Electric's DCS repair guidance aligns with this focus on high-risk components, noting that many failures occur in I/O and power modules and that repairs systematically replace aged or historically weak components, not only those that have visibly failed.

On the software side, logs and diagnostics are critical. Jiwei and IDS both highlight system log analysis as a key diagnostic method for tracing configuration errors, communication timeouts, and software crashes that may not show up in the hardware. DeltaV optimization practices reinforce this, encouraging regular use of built-in diagnostics and trend tools to catch subtle problems early. During urgent support, engineers use those same tools to answer practical questions: Did the controller reboot unexpectedly? Did a configuration download fail? Is one network segment experiencing high latency or packet loss?
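The three practical questions above (unexpected reboot, failed download, network trouble) map naturally onto a simple log scan. The sketch below assumes a plain-text event log; the patterns and the sample wording are hypothetical, since every DCS vendor phrases its events differently, so treat this as a starting point rather than a working parser for any particular system.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical patterns: adapt them to the wording your system actually logs.
PATTERNS = {
    "unexpected_reboot": re.compile(r"controller\b.*\b(restart|reboot)", re.IGNORECASE),
    "download_failed": re.compile(r"download\b.*\bfail", re.IGNORECASE),
    "comm_timeout": re.compile(r"\btime-?out", re.IGNORECASE),
}


def classify_log_lines(lines: Iterable[str]) -> Dict[str, List[int]]:
    """Return, per category, the 1-based line numbers that match its pattern."""
    hits: Dict[str, List[int]] = defaultdict(list)
    for number, line in enumerate(lines, start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                hits[label].append(number)
    return dict(hits)
```

Reading the matched line numbers in order gives a rough timeline, for example whether a failed download came before or after the reboot.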

When there is still doubt, a controlled swap test is often used. Jiwei describes the module replacement method: swapping a suspect controller or I/O module with a known-good spare to see whether the fault follows the hardware. In a well-prepared plant, spare controllers or I/O slices are preconfigured or can be quickly loaded from backups, significantly shortening this step.

The last stage is verification. Competent repair providers such as Industrial Control Solutions (ICS), AES, and NJT Automation do not consider a unit repaired until it has passed full functional testing under load conditions in a test stand. In the field, that philosophy translates into more than just clearing alarms. Engineers confirm that all loops are back in automatic, that critical sequences run to completion, that data is logged correctly to historians, and that alarm rates have returned to normal. Only then does the incident truly end.
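The four field checks above can be written down as an explicit close-out list so that nobody declares victory early. This is only an illustrative sketch: the `PostRepairSnapshot` fields and the 20 percent alarm tolerance are assumptions, and the values would be collected by hand or from your own plant tools.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PostRepairSnapshot:
    """Hypothetical values gathered after the repair."""
    loops_total: int
    loops_in_auto: int
    sequences_completed: bool
    historian_logging: bool
    alarms_per_hour: float
    baseline_alarms_per_hour: float


def open_items(s: PostRepairSnapshot, alarm_tolerance: float = 1.2) -> List[str]:
    """Return the checks that still fail; an empty list means the incident can close."""
    items = []
    if s.loops_in_auto < s.loops_total:
        items.append(f"{s.loops_total - s.loops_in_auto} loop(s) not in automatic")
    if not s.sequences_completed:
        items.append("critical sequences have not run to completion")
    if not s.historian_logging:
        items.append("historian is not logging correctly")
    if s.alarms_per_hour > alarm_tolerance * s.baseline_alarms_per_hour:
        items.append("alarm rate is above the normal baseline")
    return items
```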

The fastest urgent repair calls are the ones where the plant has quietly done its homework. Several industry bodies and practitioners stress that DCS reliability is built on ongoing maintenance, not occasional heroics.

Automation Community outlines a continuous maintenance program for DCS, including regular inspections, planned software and firmware updates, rigorous backup and recovery testing, preventive mechanical and electrical care, and structured training. When this discipline is in place, urgent repairs start on second base. Panel wiring is documented, recent changes are known, and backup images actually restore because they have been tested, not just assumed to be good.

Backups are particularly important. DeltaV best practices recommend routine system backups on a defined schedule, with periodic restore tests to prove that recovery works in practice and not just on paper. That means backing up controller configuration, control logic, graphics, and historical data, then occasionally restoring them to a test system or a spare node to ensure that the process is repeatable. Jiwei's troubleshooting guidance also calls out the need to restore known-good configurations when software faults are suspected. During an urgent repair, a clean backup can be the fastest way to get a new or repaired controller running.
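One simple way to show that a restore test actually worked is to compare file checksums between the backup set and the restored copy. The sketch below assumes the backup is an ordinary directory of files, which is a simplification: many DCS platforms use proprietary export formats, and a matching checksum only proves the files came back intact, not that the controller will run them.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def verify_restore(manifest: Dict[str, str], restored_root: Path) -> List[str]:
    """Return the files that are missing or differ after a test restore."""
    restored = build_manifest(restored_root)
    return sorted(name for name, digest in manifest.items()
                  if restored.get(name) != digest)


if __name__ == "__main__":
    # Throwaway directories stand in for a backup set and a test restore.
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
        (Path(src) / "ctrl02.cfg").write_text("hypothetical controller config\n")
        manifest = build_manifest(Path(src))
        (Path(dst) / "ctrl02.cfg").write_text("hypothetical controller config\n")
        print(verify_restore(manifest, Path(dst)))  # empty list when the restore matches
```

Storing the manifest alongside each backup makes the periodic restore test a quick, repeatable comparison rather than a visual spot check.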

Environmental control is another quiet contributor to uptime. Jiwei warns that extreme temperatures, humidity, and electromagnetic interference can accelerate failure. In real plants this translates into habits such as keeping control rooms cool and clean, ensuring cabinets are sealed and filtered, keeping high-voltage or high-noise equipment physically separated from sensitive control electronics, and using proper grounding and shielding.

Maintenance is not just about the hardware and software; it also includes the people. Automation Community and IDS both emphasize operator and technician training. Staff who understand the DCS architecture, know how to interpret alarms, and are familiar with manual modes and safe states help shorten both diagnosis and recovery. Many DeltaV users, for example, leverage simulations not only to test logic before deployment but also to train operators in realistic scenarios without putting the real plant at risk.

Finally, a Computerized Maintenance Management System, as described by Mapcon, reinforces all this work by managing preventive maintenance schedules, spare parts, work orders, and downtime metrics. Integrating DCS assets into a CMMS means that recurring problems are visible across shifts and years, and the data is available to justify upgrades or to refine maintenance intervals before something fails at the worst possible time.
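The downtime metrics a CMMS tracks usually come down to a few standard ratios: mean time to repair (MTTR), mean time between failures (MTBF), and availability. The sketch below shows the arithmetic on a hypothetical `Outage` record; the field names are assumptions and do not represent Mapcon's or any other CMMS's data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Outage:
    """Hypothetical downtime record exported from a CMMS."""
    asset: str
    start: datetime
    end: datetime

    @property
    def hours(self) -> float:
        return (self.end - self.start).total_seconds() / 3600.0


def mttr_hours(outages: List[Outage]) -> float:
    """Mean time to repair: average outage duration."""
    if not outages:
        raise ValueError("no outages recorded")
    return sum(o.hours for o in outages) / len(outages)


def mtbf_hours(outages: List[Outage], period_hours: float) -> float:
    """Mean time between failures: uptime in the period divided by failure count."""
    if not outages:
        raise ValueError("no outages recorded")
    downtime = sum(o.hours for o in outages)
    return (period_hours - downtime) / len(outages)


def availability(outages: List[Outage], period_hours: float) -> float:
    """Fraction of the period the asset was available: MTBF / (MTBF + MTTR)."""
    mtbf = mtbf_hours(outages, period_hours)
    return mtbf / (mtbf + mttr_hours(outages))
```

For example, two outages of 2 and 4 hours in a 1,000-hour period give an MTTR of 3 hours, an MTBF of 497 hours, and an availability of 99.4 percent.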

When production is down, there is always a temptation to bypass cybersecurity controls "just this once" to let someone connect quickly. In the current threat landscape, that is a dangerous reflex.

The ISA Global Cybersecurity Alliance highlights that DCS cybersecurity must cover both IT and OT, and recommends aligning with standard ANSI/ISA-62443-3-3. The first step, according to that standard, is a clear asset inventory and risk assessment; only when you know what you have and how it is segmented can you safely expose anything for remote support. ISA's zones and conduits model promotes segmenting critical control assets away from less trusted networks, which both limits the blast radius of any incident and provides a clear place where remote connections should terminate.

Service providers like Industrial Control Solutions and MDI Advantage show what secure remote support can look like in practice. ICS designs communication modules that give their engineers access to the control equipment without exposing the broader corporate network. MDI Advantage assists customers in setting up secure connections without requiring a preexisting complex VPN, while still maintaining appropriate separation. These approaches align with ISA's advice: design remote access as part of a layered defense, not as an emergency shortcut.

From an operational perspective, this means a few concrete things. Remote access paths should be defined, documented, and tested before an incident. Accounts and roles must be tightly controlled and kept up to date, a point ISA emphasizes in the context of workforce turnover and dormant accounts. Security patches and antivirus for systems that can accept them should be kept current, as IDS notes, but without jeopardizing real-time control. And when a critical outage occurs, the team should follow a predefined procedure to grant, monitor, and revoke external access rather than improvising under pressure.
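The "grant, monitor, and revoke" procedure can be sketched as a time-boxed access record with an audit trail. This is a conceptual illustration only: the class names and fields are hypothetical, and a real plant would enforce the time limit in its firewall, jump host, or remote-access product rather than in a script.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


@dataclass
class AccessGrant:
    """Hypothetical time-boxed remote access approval."""
    user: str
    approved_by: str
    granted_at: datetime
    max_duration: timedelta
    revoked_at: Optional[datetime] = None

    def is_active(self, now: datetime) -> bool:
        """Active only inside the approved window and before any revocation."""
        if self.revoked_at is not None and now >= self.revoked_at:
            return False
        return self.granted_at <= now < self.granted_at + self.max_duration


@dataclass
class AccessLog:
    """Append-only record of who was granted access and when it ended."""
    events: List[Tuple[datetime, str, str]] = field(default_factory=list)

    def grant(self, user: str, approved_by: str, now: datetime,
              max_duration: timedelta) -> AccessGrant:
        self.events.append((now, "grant", f"{user} approved by {approved_by}"))
        return AccessGrant(user, approved_by, now, max_duration)

    def revoke(self, grant: AccessGrant, now: datetime) -> None:
        grant.revoked_at = now
        self.events.append((now, "revoke", grant.user))
```

The design point is that access expires by default: even if nobody remembers to revoke it after the outage, the grant stops being valid once `max_duration` has passed.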

Handled correctly, cybersecurity and urgent repair support each other. Secure, reliable remote access can significantly shorten time to resolution, but only if it is engineered deliberately, not bolted on during a crisis.

During a controller failure, someone inevitably asks whether it is time to replace the whole system. With mixed-vintage equipment, that is often a fair question. IDS points out that many plants run modern controllers alongside obsolete operating systems or legacy DCS platforms, which can create uncomfortable reliability and security risks. Repair, however, remains a powerful tool, especially when supported by specialized service providers.

Schneider ElectricŌĆÖs industrial repair services demonstrate how repair can keep legacy DCS platforms, such as older Westinghouse or Bailey systems, alive by remanufacturing and refurbishing modules instead of forcing a rip-and-replace migration. AES and NJT Automation similarly position repair as a way to avoid the high cost and disruption of full replacement, especially when the controller platform is still fundamentally sound but individual components have failed.

A useful way to think about your options in an emergency is summarized below.

| Strategy | Best suited for | Advantages | Limitations |
|---|---|---|---|
| Urgent repair of failed controller or module | Platform is still supported or repairable; downtime cost is high but tolerable for short outages | Fastest practical recovery; preserves existing control logic and operator training; often significantly cheaper than replacement | May not address deeper obsolescence or cybersecurity gaps; dependent on availability of spares and repair expertise |
| Like-for-like replacement of controller or rack | Hardware is commercially available and configuration backups are reliable | Refreshes hardware while keeping existing strategy; can reduce failure risk if old units were degraded | Requires careful configuration transfer and testing; limited benefit if underlying design is outdated |
| Planned migration or upgrade of DCS | System is nearing end of life, lacking vendor support, or cannot meet new requirements | Addresses obsolescence, scalability, and security in a structured project; can improve diagnostics and efficiency | Requires careful engineering studies and typically planned shutdowns; not a quick fix for an urgent failure |

The Control.com engineering community advises that when upgrading DCSs, plants often benefit from a mix of short, planned shutdowns in limited areas and hot cutover for critical loops. The same thinking applies during urgent repairs: sometimes a very short, well-planned outage in a specific unit is safer and more efficient than trying to maintain illusory "zero downtime" while swapping critical controllers.

The key is to separate the urgent decision from the strategic one. Use repair and like-for-like replacement to get safely back in operation first. Then use data from your CMMS, incident logs, and risk assessments to decide whether a structured migration is the right next step.
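The separation between the urgent decision and the strategic one can be expressed as two independent functions. The sketch below is a deliberate simplification of the strategy table: the input flags and the ordering (repair first, then like-for-like replacement) are my own assumptions drawn from the "best suited for" column, not a rule from any of the cited sources.

```python
from enum import Enum
from typing import Optional


class Strategy(Enum):
    URGENT_REPAIR = "urgent repair of failed controller or module"
    LIKE_FOR_LIKE = "like-for-like replacement"


def immediate_action(repairable: bool, replacement_available: bool,
                     backups_reliable: bool) -> Optional[Strategy]:
    """Pick the emergency action; None means escalate to a human decision."""
    if repairable:
        return Strategy.URGENT_REPAIR
    if replacement_available and backups_reliable:
        return Strategy.LIKE_FOR_LIKE
    return None


def migration_review_due(near_end_of_life: bool, vendor_support: bool,
                         meets_new_requirements: bool) -> bool:
    """Flag a later, strategic review; never used to choose the emergency fix."""
    return near_end_of_life or not vendor_support or not meets_new_requirements
```

Keeping the two functions separate mirrors the advice: the first answers "how do we get running tonight", the second answers "what do we put in next year's plan".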

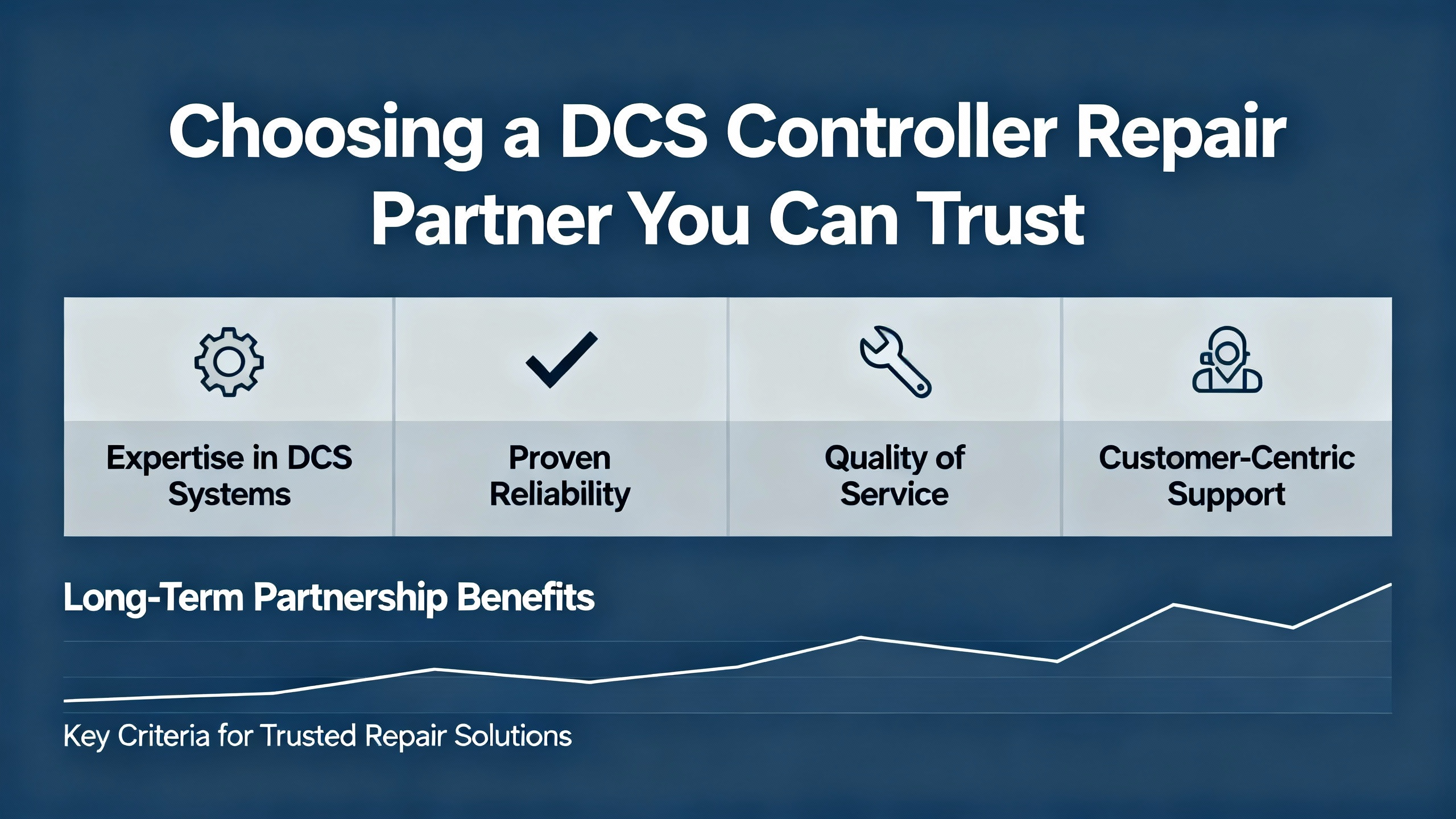

Not all repair shops are created equal, especially when it comes to DCS controllers and associated modules. Selecting the right partner before you need them is one of the most effective ways to reduce downtime.

Experience with industrial controls is non-negotiable. AES, for instance, highlights more than three decades of industrial electronics repair, with detailed processes for inspecting every input, output, connector, and moving part, and for replacing high-failure components such as relays and capacitors. NJT Automation operates a distributed network of specialists who focus on specific families of PLCs, HMIs, drives, and controllers rather than treating all hardware the same. This specialization matters because subtle, vendor-specific quirks often determine whether a repair holds up under real plant conditions.

Testing philosophy is another critical differentiator. ICS, AES, and others stress that they load test every repaired unit, running it under operational loads to verify performance. Schneider Electric describes using both static and dynamic testing and proactively replacing at-risk parts based on a large repair-history database. From a DCS perspective, that kind of rigor means a lower chance of the "repaired" controller failing again as soon as it is back in a hot cabinet.

Warranties provide a visible signal of confidence. ICS offers a twelve-month warranty. NJT Automation and Schneider Electric provide one-year coverage on repaired DCS modules. AES offers a twenty-four month limited warranty on workmanship-related failures. While the fine print differs, a repair partner willing to stand behind their work for a year or more is usually investing in quality components, proper processes, and skilled technicians.

Responsiveness and communication also matter. Kredit Automation & Controls and MDI Advantage both describe support models where highly trained engineers can be dispatched nationwide or connect remotely, with initial troubleshooting starting over the phone as soon as you call. Powergenics shares a testimonial where engineers organized a conference call outside normal business hours to walk a customer through repairing a controller card, providing a level of technical guidance the original equipment manufacturer did not match. Those behaviors are what you want during an urgent DCS controller incident: clear, direct communication, and engineers who speak the language of loops, networks, and interlocks.

When you evaluate providers, ask practical questions: which DCS and PLC platforms do they see regularly, how do they test repaired controllers and I/O, what is their standard turnaround time, what are their emergency options, how long is the warranty, and whether they have experience with your industry's environmental and regulatory demands. A good partner will answer specifically, not with vague assurances.

There are a few pieces of information and preparation that consistently make urgent DCS controller repair faster and safer. Having them ready before you place the call makes a real difference.

At minimum, you should be able to describe the symptoms clearly. That includes which areas of the plant are affected, what the operators see on the screens, whether the issue started after a particular event such as a power dip, a configuration change, or maintenance work, and whether redundancy has behaved as expected. If you can capture screenshots or alarm summaries, do so.

Configuration and backup status is equally important. Know where your DCS backups live, when they were last run, and whether restore procedures have been tested. If your plant uses a CMMS or historian, being able to share relevant trends and work history helps engineers understand whether the failure is truly sudden or the end of a long degradation.

From an access perspective, coordinate ahead of time how remote support will be granted if needed, who can authorize changes, and how to bring external engineers on site with the right safety orientation and plant escorts. Combining this with a clear escalation tree avoids delays while people search for permissions during a crisis.

These are simple things, but in real plants they are often the difference between a two-hour interruption and a fifteen-hour ordeal.

A question I often hear is whether it is safe to run temporarily on a single controller if redundancy has been lost. The honest answer is that it depends on your risk assessment and process-criticality. Many DCS designs tolerate brief periods on a single controller, but you are accepting reduced fault tolerance. The key is to document the condition, understand what protections remain, and schedule repair or replacement as soon as practical rather than treating the situation as the new normal.

Another common concern is how often DCS backups should be run. Industry guidance from Automation Community and DeltaV practices suggests that the frequency should match the rate of change in your system. Facilities with frequent configuration updates may back up daily or weekly, while more static plants may choose longer intervals. Whatever the interval, regular restore tests are at least as important as the backups themselves.
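Matching backup frequency to the rate of change can be checked mechanically: compare the time of the last backup against the times of recorded configuration changes. The following is a minimal sketch; the change timestamps would come from your own change log or CMMS, and the rule that any change after the last backup makes it stale is a strict assumption you may want to relax.

```python
from datetime import datetime, timedelta
from typing import Iterable, List


def unprotected_changes(last_backup: datetime,
                        change_times: Iterable[datetime]) -> List[datetime]:
    """Return configuration changes made after the last backup, oldest first."""
    return sorted(t for t in change_times if t > last_backup)


def backup_is_stale(last_backup: datetime, change_times: Iterable[datetime],
                    now: datetime, max_age: timedelta) -> bool:
    """Stale if any change is not covered, or the backup is older than max_age."""
    if unprotected_changes(last_backup, change_times):
        return True
    return now - last_backup > max_age
```

A plant with frequent changes will trip the first condition often and end up backing up daily or weekly; a static plant will mostly be governed by `max_age`.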

Plant teams also ask when it is time to stop repairing an aging DCS and plan a migration. Signals include growing difficulty finding spare parts, lack of vendor support, mounting cybersecurity gaps, and repeated incidents tied to legacy components. IDS recommends front-end engineering studies and obsolescence assessments to make this decision based on data rather than frustration during an outage.

Finally, people wonder whether third-party repair shops void OEM support. In practice, policies vary by vendor and contract. Some OEMs support mixed models where certain modules can be repaired by specialists while core components remain under their own service programs. The safest path is to clarify this in writing with both your OEM and your chosen repair partner before relying on third-party repairs for critical equipment.

In a high-value continuous process, urgent DCS controller repair is less about heroics and more about preparation, partnerships, and disciplined troubleshooting. If you invest in solid maintenance practices, backups, secure remote access, and relationships with repair specialists who know your hardware, then the next controller failure will still be stressful, but it will not be chaotic. When the alarms start ringing, you will have a clear plan, the right people to call, and a path to get your plant safely back in automatic in the shortest possible time.
